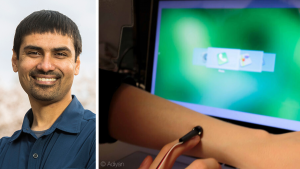

Shwetak Patel, the Washington Research Foundation Entrepreneurship Endowed Professor in the UW Department of Electrical & Computer Engineering and the Paul G. Allen School of Computer Science & Engineering, is part of an interdisciplinary team that has received the ISS 10-Year Impact Award for work involving on-body sensing. Photos courtesy of the Allen School.

Adapted from an article by Kristin Osborne, Allen School

Nearly a decade ago, well before mixed reality and the metaverse went mainstream, a team of researchers from the University of Washington’s UbiComp Lab and Microsoft Research was making noise within the human-computer interaction community with a novel approach to on-body sensing. Their technique, which leveraged transdermal ultrasound signals, turned the human body into an input surface for pressure-aware continuous touch sensing and gesture recognition without requiring extensive, not to mention expensive, instrumentation.

Last week, that same team collected the 10-Year Impact Award from the Association for Computing Machinery’s Interactive Surfaces and Spaces Conference (ISS 2022) for “significant impact on subsequent on-body and close-to-body sensing interaction techniques over the last decade within the SIGCHI and broader academic communities.” SIGCHI is the ACM’s Special Interest Group on Computer Human Interaction, which sponsors ISS.

The researchers originally presented the paper, “The Sound of Touch: On-body Touch and Gesture Sensing Based on Transdermal Ultrasound Propagation,” at the ITS 2013 conference. At that time, the “T” in ITS stood for “Tabletops,” as “Spaces” had not yet come into their own in interaction research. The paper described a system that propagates low-frequency ultrasound signals through a person’s body using an inexpensive combination of off-the-shelf transmitters and receivers. As the person performs a touch-based gesture, the signal becomes attenuated in distinctive ways according to differences in bone structure, muscle mass and other factors, while the distance between the touch point and the transmitter and receiver, along with the amount of pressure exerted, affects the signal amplitude. The system then measures these variations in the acquired signal and applies machine learning techniques to classify the location and type of each interaction.
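To make the sensing idea more concrete, here is a minimal Python sketch, under stated assumptions, of the general pipeline described above: measure how strongly each transmitted tone arrives at the receiver, then classify the touch location from those amplitudes. The sampling rate, tone frequencies, location labels and attenuation values are all hypothetical, the signals are synthetic, and the nearest-centroid classifier is a simple stand-in, not the model the researchers actually used.

```python
import math

# Hypothetical parameters: the article does not give the real sampling rate or tones.
SAMPLE_RATE_HZ = 200_000
TONES_HZ = (25_000, 40_000)
N_SAMPLES = 400  # 2 ms window; each tone completes a whole number of cycles


def tone_amplitude(samples, freq_hz, sample_rate_hz=SAMPLE_RATE_HZ):
    """Estimate the amplitude of one sinusoidal component via a single-bin DFT."""
    re = im = 0.0
    for i, s in enumerate(samples):
        phase = 2 * math.pi * freq_hz * i / sample_rate_hz
        re += s * math.cos(phase)
        im += s * math.sin(phase)
    return 2 * math.hypot(re, im) / len(samples)


def features(samples):
    """Feature vector: received amplitude at each transmitted tone."""
    return [tone_amplitude(samples, f) for f in TONES_HZ]


def train_centroids(examples):
    """Map {label: [feature_vector, ...]} to {label: per-dimension mean vector}."""
    return {
        label: [sum(col) / len(col) for col in zip(*vecs)]
        for label, vecs in examples.items()
    }


def classify(centroids, vec):
    """Nearest-centroid classification by Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], vec))


def synth(amps):
    """Synthetic received signal: one sine per tone, scaled by a made-up attenuation."""
    return [
        sum(a * math.sin(2 * math.pi * f * i / SAMPLE_RATE_HZ) for a, f in zip(amps, TONES_HZ))
        for i in range(N_SAMPLES)
    ]


# Hypothetical training data: touches near the wrist attenuate the tones less
# than touches near the elbow in this made-up example.
examples = {
    "near_wrist": [features(synth((1.0, 0.8))), features(synth((0.95, 0.78)))],
    "near_elbow": [features(synth((0.4, 0.3))), features(synth((0.42, 0.33)))],
}
centroids = train_centroids(examples)
print(classify(centroids, features(synth((0.9, 0.75)))))  # near_wrist
```

A real system would use many more frequencies, noisy measurements and a stronger classifier; the point here is only that attenuation patterns across tones can serve as a location signature.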

Although the setup was simple (the proof of concept relied on a single transmitter and receiver pair), the technique was robust enough to provide continuous detection and localization of pressure-sensitive touch along with classification of arm-grasping hand gestures for a variety of interaction scenarios.

“Our approach was unique in that it was the first time someone had applied transdermal ultrasound propagation to the problem of on-body sensing. It also was the first system capable of detecting both the onset and offset of a touch interaction,” recalled Shwetak Patel, who holds the Washington Research Foundation Entrepreneurship Professorship in the UW Department of Electrical & Computer Engineering and the Paul G. Allen School of Computer Science & Engineering. “We showed that our system could infer rich contextual information from a variety of interactions to support user input and information retrieval, from touch-and-click actions to slider-like controls.”

Patel and his collaborators evaluated two potential configurations of their system: a wearable transmitter and receiver pair for pressure-sensitive, localized touch-based sensing, and an armband that combined the two for gesture recognition. In a series of studies involving a total of 20 participants, they demonstrated that the system could classify a set of touch-based gestures along the forearm with roughly 98% accuracy, and more complex arm-grasping gestures with 86% accuracy, using only baseline machine learning techniques with little fine-tuning. Although the team’s experiments focused on the forearm, the transmitter and receiver could be positioned to enable touch-based sensing anywhere on the user’s body.

Desney Tan

Patel’s collaborators on the project include Allen School affiliate professor Desney Tan, currently vice president and managing director of Microsoft Health Futures, and his Microsoft colleague Dan Morris, now a research scientist with Google’s AI for Nature and Society program; lead author Adiyan Mujibiya, former University of Tokyo student and Microsoft Ph.D. Fellow who now heads the Tech Strategy Office at Yahoo Japan and Z Holdings Corp.; Jun Rekimoto, professor at the University of Tokyo; and Xiang Cao, former Microsoft researcher and director at Lenovo Research who is now a scientist at bilibili.

“The impact of this research continues to be felt within the industry-leading companies developing AR/VR and wearable technologies today,” said Patel. “It’s great to see the results of the long collaboration between our group and Desney’s team at MSR being recognized in this way.”

In addition to the 2013 ITS paper, that collaboration includes a project that turned the human body into an antenna by harnessing electromagnetic signals for wireless gesture control; an ultra-low power system for passively sensing human motion using static electric field sensing; and a system capable of classifying human gestures in the home by leveraging electromagnetic noise in place of instrumentation. Each of those papers earned a Best Paper Award or Best Paper Honorable Mention at the time of publication.


Congratulations to Shwetak, Desney and the entire team!