Engineering research matters

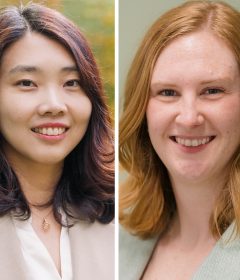

Learn about the impact and importance of research at the UW College of Engineering, including work by UW ECE assistant professors Jungwon Choi (left) and Kim Ingraham (right).

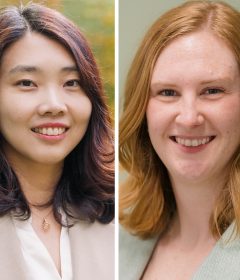

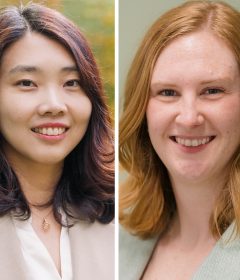

UW ECE doctoral students and Fellowship recipients Marziyeh Rezaei (left) and Pengyu Zeng (right) are conducting research aimed at enabling scalable, power-efficient optical links for the next generation of edge-cloud data centers supporting 6G infrastructure.

UW ECE congratulates the graduating Class of 2025. We wish you all the best for the future!

Niveditha (Nivii) Kalavakonda (Ph.D. EE ‘25) and Devan Perkash (BSECE ‘25) will speak at this year’s Graduation Ceremony, which will take place in the Alaska Airlines Arena at Hec Edmundson Pavilion on Wednesday, June 11, from 7 to 9 p.m.

UW ECE assistant professor Yiyue Luo is developing smart clothing that can sense where a person is, know what movement is needed to perform a task, and provide physical cues to guide performance.

UW ECE alumna Thy Tran (BSEE ‘93) will be the honored guest speaker for the 2025 UW ECE Graduation Ceremony, which will take place in the Alaska Airlines Arena at Hec Edmundson Pavilion on Wednesday, June 11, from 7 to 9 p.m.

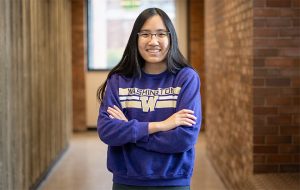

UW ECE undergraduate Mary Bun studies multitasking in fruit flies to offer valuable insights into disorders like Parkinson’s disease. Her work is inspired by neural engineering research led by UW ECE and UW Medicine Professor Chet Moritz.

[Engineering research matters](https://www.engr.washington.edu/news/article/2025-06-23/engineering-research-matters)

UW ECE graduate students receive Qualcomm Innovation Fellowship

Congratulations, Class of 2025!

UW ECE Graduation will feature two student speakers

Thy Tran from Micron Technology to speak at UW ECE Graduation

Unlocking the brain with the fruit fly

UW ECE graduate students receive Qualcomm Innovation Fellowship

UW ECE doctoral students Marziyeh Rezaei (left) and Pengyu Zeng (right) have been named recipients of a 2025 Qualcomm Innovation Fellowship. They are conducting research aimed at enabling scalable, power-efficient optical links for the next generation of edge-cloud data centers supporting 6G infrastructure.

Qualcomm, a multinational corporation that creates microchips, software, and solutions related to wireless technology, recently announced that two UW ECE doctoral students will receive a 2025 Qualcomm Innovation Fellowship. These students are among only 34 recipients of this prestigious award in North America. The Fellowship program is focused on recognizing, rewarding, and mentoring graduate students conducting a broad range of technical research, based on Qualcomm’s core values of innovation, execution, and teamwork.

Fellowship recipients Marziyeh Rezaei and Pengyu Zeng are both doctoral students at UW ECE advised by Assistant Professor Sajjad Moazeni. In Moazeni’s lab, Rezaei and Zeng conduct research at the intersection of integrated system design and photonics, with applications in computing and communication, sensing and imaging, and the life sciences.

The Fellowship program empowers graduate students like Rezaei and Zeng to take giant steps toward achieving their research goals. Students from top American and international universities are encouraged to apply and submit a proposal on any innovative idea of their choosing. Winning students earn a one-year fellowship and are mentored by Qualcomm engineers to facilitate the success of the proposed research project.

“Marziyeh and Pengyu are developing the next generation of coherent optics for connectivity, from data centers to 6G networks. I truly enjoy working with students who take on challenging, real-world problems, and I’m proud of them!”

— UW ECE Assistant Professor Sajjad Moazeni

Rezaei and Zeng proposed a technology that connects tens of thousands of processor chips in a data center using light carried over optical fibers; transmission over fiber is nearly lossless compared with conventional electrical signaling over copper wire. Their approach uses ultra-low-power analog circuits to replace conventional, power-hungry components in optical links. Both Rezaei and Zeng will be actively involved in the design, implementation, and testing of the proposed solution, each contributing their expertise to different core components of the system. They will work under Moazeni’s guidance, with mentorship from Qualcomm engineers Bhushan Asuri and Mali Nagarajan.

This technology could enable scalable, power-efficient optical links for the next generation of edge-cloud data centers supporting 6G infrastructure. Power-efficient, high-throughput optical interconnects are critical for 6G networks: global mobile data traffic is expected to exceed 1,000 exabytes per month by 2030, which will demand optical links that support terabit-per-second speeds while keeping energy consumption low enough for sustainable operation. Rezaei and Zeng’s proposed coherent optical links offer an innovative way to address the scalability, speed, and energy-efficiency limitations of today’s optical interconnects.
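For a sense of scale, the 2030 traffic projection can be converted into an average data rate. This is a back-of-the-envelope sketch rather than a figure from the fellowship proposal, and it assumes decimal exabytes (1 EB = 10^18 bytes) and a 30-day month:

```latex
\[
\frac{1000\ \text{EB/month} \times 10^{18}\ \text{bytes/EB} \times 8\ \text{bits/byte}}{30 \times 86\,400\ \text{s/month}}
= \frac{8 \times 10^{21}\ \text{bits}}{2.592 \times 10^{6}\ \text{s}}
\approx 3.1 \times 10^{15}\ \text{bit/s} \approx 3.1\ \text{Pb/s}
\]
```

The result is a worldwide average across all mobile traffic, not a requirement for any single link, and peak demand runs higher than the average.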

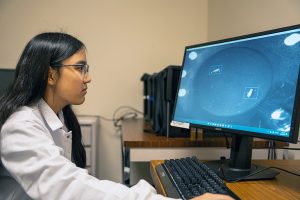

Marziyeh Rezaei

UW ECE graduate student Marziyeh Rezaei

Marziyeh Rezaei received her bachelor’s degree in electrical engineering, with a focus on electronic integrated circuit design, from Sharif University of Technology in Tehran, Iran, in 2020. She was a research assistant in the Robotics, Perception and AI Lab at the Chinese University of Hong Kong in summer 2019, and she worked at NVIDIA in summer 2024. She is currently a fourth-year doctoral student at UW ECE. In 2022, Cadence awarded her a Diversity in Technology Scholarship for her outstanding leadership skills, academic achievement, and drive to shape the world of technology. Her prior research explored the feasibility and advantages of the proposed coherent links for intra-data center applications, which can also be extended to fronthaul and midhaul interconnects.

“Winning this fellowship is a meaningful recognition of the work I’ve poured into my doctoral degree. It feels incredibly rewarding to have the excitement, dedication, and effort I’ve invested in this project acknowledged,” Rezaei said. “Achieving this goal before graduation has been a dream come true, and this support will help us bring our proposal to life and demonstrate a working proof of concept to the research community.”

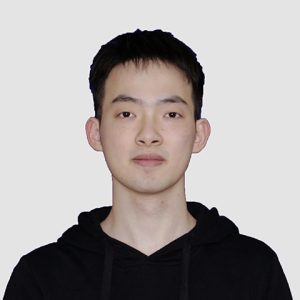

Pengyu Zeng

UW ECE graduate student Pengyu Zeng

Pengyu Zeng is a second-year doctoral student at UW ECE. He received his bachelor’s degree in electronic information engineering, with a focus on analog circuit design, from Wuhan University in Wuhan, China, in 2023. At UW ECE, his research focuses on analog and mixed-signal circuit design for high-speed optical transceivers. His current research project is developing ultra-low-power co-packaged optical interconnects based on silicon photonics.

“Receiving this fellowship is a meaningful recognition of the value of our research,” Zeng said. “The financial and technical support enable us to further advance this innovative project with greater focus and motivation.”

Learn more about the Qualcomm Innovation Fellowship program on the company’s website.

UW ECE Graduation will feature two student speakers

Niveditha (Nivii) Kalavakonda (Ph.D. EE ‘25) and Devan Perkash (BSECE ‘25) will speak at this year’s Graduation Ceremony, which will take place in the Alaska Airlines Arena at Hec Edmundson Pavilion on Wednesday, June 11, from 7 to 9 p.m. Photo of Nivii Kalavakonda by Ryan Hoover / UW ECE

The University of Washington Department of Electrical & Computer Engineering is proud to announce that in addition to UW ECE alumna Thy Tran (BSEE ‘93), two outstanding students have been selected to speak at our 2025 Graduation Ceremony. Niveditha (Nivii) Kalavakonda (Ph.D. EE ‘25) will offer remarks on behalf of graduate students, and Devan Perkash (BSECE ‘25) will represent undergraduates. Kalavakonda and Perkash were selected for this honor because of their academic achievements, extracurricular activities, and service to the Department. This year’s Graduation Ceremony will take place in the Alaska Airlines Arena at Hec Edmundson Pavilion on Wednesday, June 11, from 7 to 9 p.m. The event will be presided over by UW ECE Professor and Chair Eric Klavins.

“I am thrilled to have such fine examples of students from our graduating class to be speaking at this year’s Graduation Ceremony,” Klavins said. “Nivii exemplifies going above and beyond, not only in her research, but also in her service and leadership roles outside of the classroom. I also think that Devan’s entrepreneurial activity and enthusiasm for using technology to better people’s lives is a great example of our students’ potential to impact the world in a positive way.”

Learn more about both students below.

Graduate student speaker

Niveditha (Nivii) Kalavakonda

(Ph.D. EE ‘25)

Nivii Kalavakonda is graduating with a doctoral degree in electrical engineering. Her research at UW ECE focused on developing an assistive robot for surgical suction that works cooperatively with a surgeon. She was advised by Professor Blake Hannaford and worked closely with Dr. Laligam Sekhar in UW Medicine. Kalavakonda was also part of the Science, Technology, and Society Studies program at the UW. She has held internships at Amazon, Apple, and NVIDIA.

Kalavakonda has received the Yang Outstanding Doctoral Student Award, the UW ECE Student Impact Award, and an Amazon Catalyst fellowship. She was selected for the UW’s Husky 100, and she has been recognized as part of the Robotics: Science and Systems Pioneers and Electrical Engineering and Computer Science Rising Stars cohorts.

Kalavakonda was a predoctoral instructor for the ECE 543 Models of Robot Manipulation course. With the intention of supporting student wellness and success, Kalavakonda has also co-founded several initiatives at UW ECE, such as the Student Advisory Council; the Diversity, Equity, and Inclusion Committee; and the Future Faculty Preparation Program. After graduating, Kalavakonda will be seeking an academic position, where she hopes to continue working with students on robotics.

Undergraduate student speaker

Devan Perkash

(BSECE ‘25)

Devan Perkash is graduating with a bachelor’s degree in electrical and computer engineering. At the UW, he specialized in machine learning and computer architecture, gaining practical experience through coursework and internships. His senior capstone project, done in partnership with Amazon, focused on quantizing large language models to run efficiently on edge devices, such as smartphones and laptops, bringing advanced artificial intelligence closer to end-users.

Perkash strongly believes that technology’s primary value lies in solving practical, real-world challenges, and this belief fuels his passion for technology entrepreneurship. He took an active leadership role at the UW, serving as president of the Lavin Entrepreneurship Program, where he led more than a hundred student entrepreneurs and managed resources dedicated to fostering successful startups. He also co-founded the UW Venture Capital Club, which aims to democratize access to venture capital knowledge and networks through initiatives such as a speaker series with top Seattle-based venture capitalists.

After graduation, Perkash plans to pursue a master’s degree in electrical engineering and computer science at the University of California, Berkeley, where he aims to deepen his technical knowledge and gain entrepreneurial experience that will prepare him to create technology solutions that positively impact people’s lives.

Thy Tran from Micron Technology to speak at UW ECE Graduation

UW ECE alumna Thy Tran (BSEE ‘93) will be the honored guest speaker for the 2025 UW ECE Graduation Ceremony, which will take place in the Alaska Airlines Arena at Hec Edmundson Pavilion on Wednesday, June 11, from 7 to 9 p.m. Tran is Vice President of Global Frontend Procurement at Micron Technology.

The University of Washington Department of Electrical & Computer Engineering is proud to welcome UW ECE alumna Thy Tran (BSEE ‘93) as honored guest speaker for our 2025 Graduation Ceremony. Tran is Vice President of Global Frontend Procurement at Micron Technology, a worldwide leader in the semiconductor industry that specializes in computer memory and storage solutions. She recently transitioned from her prior role as vice president of DRAM Process Integration, where she led a global team in the United States and Asia to drive DRAM (dynamic random-access memory) technology development and transfer into high-volume manufacturing fabrication facilities. Tran has over 30 years of semiconductor experience working in the United States, Europe, and Asia, including leading roles at two semiconductor fabrication facility startups. This year’s Graduation Ceremony will take place in the Alaska Airlines Arena at Hec Edmundson Pavilion on Wednesday, June 11, from 7 to 9 p.m. The event will be presided over by UW ECE Professor and Chair Eric Klavins.

“We are looking forward to having Thy as our honored guest speaker at Graduation this year,” Klavins said. “She has had a long and successful career in the semiconductor industry and is an international leader in her field. She understands the value of resilience and persistence first-hand, and I know there is much she can share with our graduates at this event.”

Tran joined Micron in 2008 and led several DRAM module development programs, including advanced capacitor, metallization, and through-silicon-via (TSV) integration, before taking on the DRAM Process Integration leadership role for several product generations. Her technical contributions have been integral to Micron’s DRAM Technology Development Roadmap and played a significant role in helping Micron achieve DRAM technology leadership. Before joining Micron, Tran worked on logic and SRAM (static random-access memory) technologies at Motorola and on SRAM at WaferTech, now known as TSMC Washington. She also worked at Siemens (whose semiconductor business later became Infineon, which in turn spun off Qimonda), where she held several leadership roles in DRAM technology development, transfer, and manufacturing.

In addition to receiving her bachelor’s degree from UW ECE, Tran is a recent alumna of the Stanford Graduate School of Business’s Executive Program and the McKinsey Executive Leadership Program. She is a senior member of the Institute of Electrical and Electronics Engineers, known as IEEE, and a member of the Society of Women Engineers. She also serves as a strategic advisory board member for UW ECE as well as for the International Semiconductor Executive Summit and Mercado Global. She is a recipient of the Global Semiconductor Alliance’s 2023 Rising Women of Influence award.

[post_content] => Adapted from an article by Danielle Holland, UW Undergraduate Academic Affairs / Photos by Jayden Becles, University of Washington

[caption id="attachment_37906" align="alignright" width="600"]

UW ECE alumna Thy Tran (BSEE ‘93) will be honored guest speaker for the 2025 UW ECE Graduation Ceremony, which will take place in the Alaska Airlines Arena at Hec Edmundson Pavilion on Wednesday, June 11, from 7 to 9 p.m. Tran is Vice President of Global Frontend Procurement at Micron Technology.[/caption]

The University of Washington Department of Electrical & Computer Engineering is proud to welcome UW ECE alumna Thy Tran (BSEE ‘93) as honored guest speaker for our 2025 Graduation Ceremony. Tran is Vice President of Global Frontend Procurement at Micron Technology, a worldwide leader in the semiconductor industry that specializes in computer memory and storage solutions. She recently transitioned from her prior role as vice president of DRAM Process Integration, where she led a global team in the United States and Asia to drive DRAM (dynamic random-access memory) technology development and transfer into high-volume manufacturing fabrication facilities. Tran has over 30 years of semiconductor experience working in the United States, Europe, and Asia, including leading roles at two semiconductor fabrication facility startups. This year’s Graduation Ceremony will take place in the Alaska Airlines Arena at Hec Edmundson Pavilion on Wednesday, June 11, from 7 to 9 p.m. The event will be presided over by UW ECE Professor and Chair Eric Klavins.

“We are looking forward to having Thy as our honored guest speaker at Graduation this year,” Klavins said. “She has had a long and successful career in the semiconductor industry and is an international leader in her field. She understands the value of resilience and persistence first-hand, and I know there is much she can share with our graduates at this event.”

Tran joined Micron in 2008 and led several DRAM module development programs, including advanced capacitor, metallization, and through-silicon-via (TSV) integration, before taking on the DRAM Process Integration leadership role for several product generations. Her technical contributions have been integral to Micron’s DRAM Technology Development Roadmap and have played a significant role in helping Micron achieve DRAM technology leadership. Prior to Micron, Tran worked on logic and SRAM (static random-access memory) technologies at Motorola and on SRAM at WaferTech, now known as TSMC Washington. She also worked at Siemens (which later spun off Infineon and Qimonda), where she held several leadership roles in DRAM technology development, transfer, and manufacturing.

In addition to receiving her bachelor’s degree from UW ECE, Tran is a recent alumna of the Stanford Graduate School of Business’s Executive Program and the McKinsey Executive Leadership Program. She is a senior member of the Institute of Electrical and Electronics Engineers, known as IEEE, and a member of the Society of Women Engineers. She also serves as a strategic advisory board member for UW ECE as well as for the International Semiconductor Executive Summit and Mercado Global. She is a recipient of the Global Semiconductor Alliance’s 2023 Rising Women of Influence award.

[post_title] => Thy Tran from Micron Technology to speak at UW ECE Graduation

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => 2025-graduation-thy-tran

[to_ping] =>

[pinged] =>

[post_modified] => 2025-05-19 10:40:12

[post_modified_gmt] => 2025-05-19 17:40:12

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://www.ece.uw.edu/?post_type=spotlight&p=37944

[menu_order] => 5

[post_type] => spotlight

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

)

[5] => WP_Post Object

(

[ID] => 37903

[post_author] => 27

[post_date] => 2025-05-09 09:27:47

[post_date_gmt] => 2025-05-09 16:27:47

[post_content] => Adapted from an article by Danielle Holland, UW Undergraduate Academic Affairs / Photos by Jayden Becles, University of Washington

[caption id="attachment_37906" align="alignright" width="600"] UW ECE undergraduate Mary Bun studies multitasking in fruit flies to offer valuable insights into disorders like Parkinson’s disease. Her work is inspired by neural engineering research led by UW ECE Professor Chet Moritz, who holds joint appointments in UW Medicine.[/caption]

Mary Bun selects a three-day-old Drosophila fruit fly from the incubator and moves to her custom-built behavioral rig. She places the fly in a circular arena beneath a hidden camera and pulls the cloth curtain shut.

The rig’s design is both elegant and practical, featuring a black box, a top-down camera for video capture, and a custom computer program Bun coded to control the experiment. With the lights off in the Ahmed Lab, the black box blocks any external light. The fly has been bred for this experiment so that its neurons are activated by light, which means even the slightest outside interference could skew the results. With a click, a red light triggers the neurons, causing the fly’s wings to extend. The camera captures the motion, measuring each subtle angle as the wings vibrate and contract.

Seated at her workstation, Bun watches the footage stream on her computer, her software controlling both the camera and the light stimulation. The rig’s sleek design, a product of her engineering expertise, reflects her dedication. “Every part of this setup has a purpose,” she says, her eyes fixed on the fly’s delicate movements. “This is a platform for discovery. There’s so much more to uncover.”


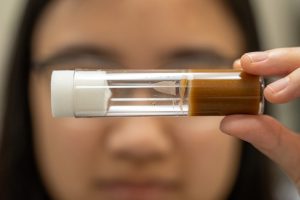

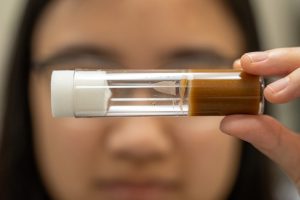

[caption id="attachment_37911" align="alignleft" width="400"] A test tube containing one of the fruit flies Mary Bun studies in the Ahmed Lab[/caption]

Mary Bun’s fascination with how things work began long before middle school. Driven by a natural curiosity for problem-solving, she knew early on that, like her brothers, she would apply to the Transition School at the University of Washington’s Robinson Center, the Center’s one-year college preparatory program for high-achieving students.

“I wanted a challenge,” Bun recalls. “The traditional high school experience didn’t feel like it would push me enough. I needed something more.” The Transition School provided an immersive environment with advanced coursework, allowing her to transition early to the UW. “I could move quickly and start thinking about research much earlier.”

Bun’s time wasn’t just academic — it helped her find a community of other highly motivated peers. “Fifteen is such a critical period in your life,” she says. As she began her college journey, “the world had been turned upside down” with the coronavirus pandemic. During the pandemic, Bun found support in her cohort, navigating the challenges of remote learning and isolation together. “The Transition School gave me the tools to succeed, but it was the people who made it meaningful.”


A spark for neural engineering

[caption id="attachment_37916" align="alignright" width="600"] Bun works on the rig she built for her research, left, and, right, the rig is ready to roll. She uses optogenetics (a technique that uses light to activate specific neurons) to study how fruit flies perform tasks like walking and vibrating their wings at the same time.[/caption]

In an Engineering 101 course, Bun was captivated by UW ECE Professor Chet Moritz’s work on neural stimulation devices for spinal cord injury rehabilitation. “I found it fascinating that we could externally influence the nervous system to help people,” she says.

Driven by this newfound passion, Bun pursued a double degree in electrical engineering and psychology. As a junior, she took on her first research opportunity in the lab of Dr. Sama Ahmed, where she applied her academic knowledge alongside her practical engineering skills.

“My first two years were about finding my footing,” she recalls. “But once I joined the lab, everything clicked. I realized how much I loved the process of discovery — asking questions, designing experiments and seeing results come to life.”

Bun’s research in the Ahmed Lab centers on an important question: How do neural circuits manage multitasking? Using optogenetics — a technique that uses light to activate specific neurons — she studies how fruit flies perform tasks like walking and vibrating their wings at the same time.

“Despite its simplicity, the fruit fly can perform surprisingly complex behaviors,” Bun explains. “By understanding how the fly brain processes multiple tasks, we can start to uncover fundamental principles about how more complex brains, like ours, might work.”


Designing pathways

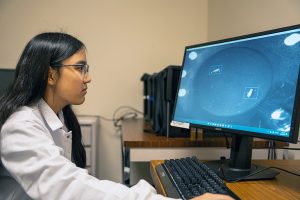

[caption id="attachment_37920" align="alignright" width="400"] Bun reviews the movement she captured with her self-made rig. She studies multitasking in fruit flies to learn more about complex movement in people.[/caption]

Under Dr. Ahmed’s guidance, Bun began constructing her behavior rig, a device she designed and built from scratch to observe and analyze fly behavior. The rig integrates hardware and software to capture high-speed video of flies responding to light stimulation, enabling Bun to measure precise movements, like wing extension and walking patterns. “Building the rig was one of the most rewarding parts of my research,” she says. “It allowed me to apply my engineering skills and coursework to solve a real scientific problem.”

Bun’s work challenges the traditional approach of studying behaviors in isolation. “Most research looks at one behavior at a time,” she says. “But in the real world, animals — and humans — are constantly juggling multiple tasks within different states and environments. I wanted to explore how the brain handles that.”

Through the Office of Undergraduate Research, Bun received support in identifying funding opportunities for her research. With the office’s assistance, she applied for and was awarded the Mary Gates Research Scholarship in 2023 and the 2024-25 Levinson Emerging Scholars Award. The prestigious Levinson award supports students conducting creative research projects in the biosciences under the guidance of UW faculty and recognizes scholars who demonstrate exceptional motivation and independence in their research. Bun is also a 2024-25 recipient of the Stephanie Subak Endowed Memorial Scholarship from the Department of Electrical and Computer Engineering.


Far-reaching impact

[caption id="attachment_37923" align="alignright" width="400"] Bun, in the red light of the rig she made to study multitasking in fruit flies.[/caption]

Bun’s research, which explores how the brain prioritizes and processes information during multitasking, has significant implications. By revealing how the brain seamlessly combines certain behaviors, her work could offer valuable insights into disorders such as Parkinson’s disease, which can affect both movement and cognitive function, potentially paving the way for new treatment approaches.

Bun will present her research as a Levinson Scholar at the Office of Undergraduate Research’s 28th Annual Undergraduate Research Symposium. Her time with the Office of Undergraduate Research and in the Ahmed Lab has been transformative, fueling both her research and growth as a scientist.

“Dr. Ahmed gave me the freedom to take full ownership of my project,” Bun said. In the Ahmed Lab’s collaborative, non-hierarchical environment, undergraduates are treated as integral members of the team, and Bun has thrived in this setting. She designed the behavior rig from the ground up, conducted her own experiments and even began writing a paper on the methods the lab developed.

Building on this experience, Bun plans to pursue a Ph.D. to study neural engineering after graduation.

“Research has taught me to embrace challenges and think creatively,” she says. “It’s not just about finding answers — it’s about asking the right questions and pushing the boundaries of what we know.”

Learn more about biosystems research at UW ECE on the Biosystems webpage. The original version of this article is available on the UW Undergraduate Academic Affairs website.

[post_title] => Unlocking the brain with the fruit fly

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => unlocking-the-brain-with-the-fruit-fly

[to_ping] =>

[pinged] =>

[post_modified] => 2025-05-09 09:29:20

[post_modified_gmt] => 2025-05-09 16:29:20

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://www.ece.uw.edu/?post_type=spotlight&p=37903

[menu_order] => 6

[post_type] => spotlight

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

)

)

[post_count] => 6

[current_post] => -1

[before_loop] => 1

[in_the_loop] =>

[post] => WP_Post Object

(

[ID] => 38314

[post_author] => 27

[post_date] => 2025-06-23 14:34:54

[post_date_gmt] => 2025-06-23 21:34:54

[post_content] =>

[post_title] => Engineering research matters

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => engineering-research-matters

[to_ping] =>

[pinged] =>

[post_modified] => 2025-06-23 14:34:54

[post_modified_gmt] => 2025-06-23 21:34:54

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://www.ece.uw.edu/?post_type=spotlight&p=38314

[menu_order] => 1

[post_type] => spotlight

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

)

[comment_count] => 0

[current_comment] => -1

[found_posts] => 920

[max_num_pages] => 154

[max_num_comment_pages] => 0

[is_single] =>

[is_preview] =>

[is_page] =>

[is_archive] => 1

[is_date] =>

[is_year] =>

[is_month] =>

[is_day] =>

[is_time] =>

[is_author] =>

[is_category] =>

[is_tag] =>

[is_tax] =>

[is_search] =>

[is_feed] =>

[is_comment_feed] =>

[is_trackback] =>

[is_home] =>

[is_privacy_policy] =>

[is_404] =>

[is_embed] =>

[is_paged] =>

[is_admin] =>

[is_attachment] =>

[is_singular] =>

[is_robots] =>

[is_favicon] =>

[is_posts_page] =>

[is_post_type_archive] => 1

[query_vars_hash:WP_Query:private] => 259bd492f9be11f3568840d89049228d

[query_vars_changed:WP_Query:private] => 1

[thumbnails_cached] =>

[allow_query_attachment_by_filename:protected] =>

[stopwords:WP_Query:private] =>

[compat_fields:WP_Query:private] => Array

(

[0] => query_vars_hash

[1] => query_vars_changed

)

[compat_methods:WP_Query:private] => Array

(

[0] => init_query_flags

[1] => parse_tax_query

)

)

[_type:protected] => spotlight

[_from:protected] => newsawards_landing

[_args:protected] => Array

(

[post_type] => spotlight

[meta_query] => Array

(

[0] => Array

(

[key] => type

[value] => news

[compare] => LIKE

)

)

[posts_per_page] => 6

[post_status] => publish

)

[_jids:protected] =>

[_taxa:protected] => Array

(

)

[_meta:protected] => Array

(

[0] => Array

(

[key] => type

[value] => news

[compare] => LIKE

)

)

[_metarelation:protected] => AND

[_results:protected] => Array

(

[0] => WP_Post Object

(

[ID] => 38314

[post_author] => 27

[post_date] => 2025-06-23 14:34:54

[post_date_gmt] => 2025-06-23 21:34:54

[post_content] =>

[post_title] => Engineering research matters

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => engineering-research-matters

[to_ping] =>

[pinged] =>

[post_modified] => 2025-06-23 14:34:54

[post_modified_gmt] => 2025-06-23 21:34:54

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://www.ece.uw.edu/?post_type=spotlight&p=38314

[menu_order] => 1

[post_type] => spotlight

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

)

[1] => WP_Post Object

(

[ID] => 38224

[post_author] => 27

[post_date] => 2025-06-16 08:10:58

[post_date_gmt] => 2025-06-16 15:10:58

[post_content] =>

[post_title] => Unlocking the brain with the fruit fly

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => unlocking-the-brain-with-the-fruit-fly

[to_ping] =>

[pinged] =>

[post_modified] => 2025-05-09 09:29:20

[post_modified_gmt] => 2025-05-09 16:29:20

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://www.ece.uw.edu/?post_type=spotlight&p=37903

[menu_order] => 6

[post_type] => spotlight

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

)

)


)


[_results:protected] => Array

(


[1] => WP_Post Object

(

[ID] => 38224

[post_author] => 27

[post_date] => 2025-06-16 08:10:58

[post_date_gmt] => 2025-06-16 15:10:58

[post_content] => [caption id="attachment_38225" align="alignright" width="600"] UW ECE doctoral students Marziyeh Rezaei (left) and Pengyu Zeng (right) have been named recipients of a 2025 Qualcomm Innovation Fellowship. They are conducting research aimed at enabling scalable, power-efficient optical links for the next generation of edge-cloud data centers supporting 6G infrastructure.[/caption]

Qualcomm, a multinational corporation that creates microchips, software, and solutions related to wireless technology, recently announced that two UW ECE doctoral students will receive a 2025 Qualcomm Innovation Fellowship. These students are among only 34 recipients of this prestigious award in North America. The Fellowship program is focused on recognizing, rewarding, and mentoring graduate students conducting a broad range of technical research, based on Qualcomm’s core values of innovation, execution, and teamwork.

Fellowship recipients Marziyeh Rezaei and Pengyu Zeng are both doctoral students at UW ECE advised by Assistant Professor Sajjad Moazeni. In Moazeni’s lab, Rezaei and Zeng conduct research at the intersection of integrated system design and photonics, with applications in computing and communication, sensing and imaging, and the life sciences.

The Fellowship program empowers graduate students like Rezaei and Zeng to take giant steps toward achieving their research goals. Students from top American and international universities are encouraged to apply and submit a proposal on any innovative idea of their choosing. Winning students earn a one-year fellowship and are mentored by Qualcomm engineers to facilitate the success of the proposed research project.


“Marziyeh and Pengyu are developing the next generation of coherent optics for connectivity, from data centers to 6G networks. I truly enjoy working with students who take on challenging, real-world problems, and I’m proud of them!”

— UW ECE Assistant Professor Sajjad Moazeni

Rezaei and Zeng proposed developing technology that connects tens of thousands of computer processor chips in a data center using light traveling through optical fibers, which is nearly lossless compared with electrical signals carried over conventional copper wire. Their novel approach uses ultra-low-power analog circuits to replace conventional, power-hungry components in optical links. Both Rezaei and Zeng will be actively involved in the design, implementation, and testing of the proposed solution, each contributing their expertise to different core components of the system. They will be working under Moazeni’s guidance, along with mentorship from Qualcomm engineers Bhushan Asuri and Mali Nagarajan.

This technology could enable scalable, power-efficient optical links for the next generation of edge-cloud data centers supporting 6G infrastructure. Power-efficient, high-throughput optical interconnects are critical for 6G networks: global mobile data traffic is expected to exceed 1,000 exabytes per month by 2030, demanding optical links that can support terabit-per-second speeds while keeping energy consumption low enough for sustainable operation. Rezaei and Zeng’s proposed coherent optical links offer a new way to address the scalability, speed, and energy-efficiency limitations of today’s optical interconnects.

Marziyeh Rezaei

[caption id="attachment_38229" align="alignright" width="300"] UW ECE graduate student Marziyeh Rezaei[/caption]

Marziyeh Rezaei received her bachelor’s degree in electrical engineering in 2020 from Sharif University of Technology in Tehran, Iran, with a focus on electronic integrated circuit design. She worked as a research assistant in the Robotics, Perception and AI Lab at the Chinese University of Hong Kong during summer 2019 and at NVIDIA in summer 2024. She is currently a fourth-year doctoral student at UW ECE. In 2022, she was awarded a Diversity in Technology Scholarship from Cadence Design Systems for her outstanding leadership skills, academic achievement, and drive to shape the world of technology. Her prior research includes exploring the feasibility and advantages of proposed coherent links for intra-data center applications, which can also be extended to fronthaul and midhaul interconnects.

“Winning this fellowship is a meaningful recognition of the work I’ve poured into my doctoral degree. It feels incredibly rewarding to have the excitement, dedication, and effort I’ve invested in this project acknowledged,” Rezaei said. “Achieving this goal before graduation has been a dream come true, and this support will help us bring our proposal to life and demonstrate a working proof of concept to the research community.”


Pengyu Zeng

[caption id="attachment_38230" align="alignright" width="300"] UW ECE graduate student Pengyu Zeng[/caption]

Pengyu Zeng is a second-year doctoral student at UW ECE. He received his bachelor’s degree in electronic information engineering, with a focus on analog circuit design, from Wuhan University in Wuhan, China, in 2023. At UW ECE, his research focuses on analog and mixed-signal circuit design for high-speed optical transceivers. His current research project is developing ultra-low-power co-packaged optical interconnects based on silicon photonics.

“Receiving this fellowship is a meaningful recognition of the value of our research,” Zeng said. “The financial and technical support enable us to further advance this innovative project with greater focus and motivation.”

Learn more about the Qualcomm Innovation Fellowship program on the company’s website.

[post_title] => UW ECE graduate students receive Qualcomm Innovation Fellowship

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => 2025-qualcomm-innovation-fellowship

[to_ping] =>

[pinged] =>

[post_modified] => 2025-06-16 08:17:01

[post_modified_gmt] => 2025-06-16 15:17:01

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://www.ece.uw.edu/?post_type=spotlight&p=38224

[menu_order] => 2

[post_type] => spotlight

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

)

[2] => WP_Post Object

(

[ID] => 38200

[post_author] => 51

[post_date] => 2025-06-09 15:40:58

[post_date_gmt] => 2025-06-09 22:40:58

[post_content] =>

[post_title] => Congratulations, Class of 2025!

[post_excerpt] =>

[post_status] => publish

[comment_status] => closed

[ping_status] => closed

[post_password] =>

[post_name] => https-www-ece-uw-edu-news-events-graduation

[to_ping] =>

[pinged] =>

[post_modified] => 2025-06-09 16:00:34

[post_modified_gmt] => 2025-06-09 23:00:34

[post_content_filtered] =>

[post_parent] => 0

[guid] => https://www.ece.uw.edu/?post_type=spotlight&p=38200

[menu_order] => 3

[post_type] => spotlight

[post_mime_type] =>

[comment_count] => 0

[filter] => raw

)

[3] => WP_Post Object

(

[ID] => 37959

[post_author] => 27

[post_date] => 2025-06-02 10:26:16

[post_date_gmt] => 2025-06-02 17:26:16

[post_content] => [caption id="attachment_37963" align="alignright" width="600"] Niveditha (Nivii) Kalavakonda (Ph.D. EE ’25) and Devan Perkash (BSECE ’25) will speak at this year’s Graduation Ceremony, which will take place in the Alaska Airlines Arena at Hec Edmundson Pavilion on Wednesday, June 11, from 7 to 9 p.m. Photo of Nivii Kalavakonda by Ryan Hoover / UW ECE[/caption]

The University of Washington Department of Electrical & Computer Engineering is proud to announce that in addition to UW ECE alumna Thy Tran (BSEE ’93), two outstanding students have been selected to speak at our 2025 Graduation Ceremony. Niveditha (Nivii) Kalavakonda (Ph.D. EE ’25) will offer remarks on behalf of graduate students, and Devan Perkash (BSECE ’25) will represent undergraduates. Kalavakonda and Perkash were selected for this honor because of their academic achievements, extracurricular activities, and service to the Department. This year’s Graduation Ceremony will take place in the Alaska Airlines Arena at Hec Edmundson Pavilion on Wednesday, June 11, from 7 to 9 p.m. The event will be presided over by UW ECE Professor and Chair Eric Klavins.

“I am thrilled to have such fine examples of students from our graduating class to be speaking at this year’s Graduation Ceremony,” Klavins said. “Nivii exemplifies going above and beyond, not only in her research, but also in her service and leadership roles outside of the classroom. I also think that Devan’s entrepreneurial activity and enthusiasm for using technology to better people’s lives is a great example of our students’ potential to impact the world in a positive way.”

Learn more about both students below.

Graduate student speaker

Niveditha (Nivii) Kalavakonda

(Ph.D. EE ’25)

[caption id="attachment_37969" align="alignright" width="400"] Niveditha (Nivii) Kalavakonda (Ph.D. EE '25)[/caption]

Nivii Kalavakonda is graduating with a doctoral degree in electrical engineering. Her research at UW ECE focused on developing an assistive robot for surgical suction that works cooperatively with a surgeon. She was advised by Professor Blake Hannaford and worked closely with Dr. Laligam Sekhar in UW Medicine. Kalavakonda was also part of the Science, Technology, and Society Studies program at the UW. She has held internships at Amazon, Apple, and NVIDIA.

Kalavakonda has received the Yang Outstanding Doctoral Student Award, the UW ECE Student Impact Award, and an Amazon Catalyst fellowship. She was named to the UW’s Husky 100 and was selected for the Robotics: Science and Systems (RSS) Pioneers and Electrical Engineering and Computer Science (EECS) Rising Stars cohorts.

Kalavakonda was a predoctoral instructor for the ECE 543 Models of Robot Manipulation course. To support student wellness and success, she also co-founded several initiatives at UW ECE, including the Student Advisory Council; the Diversity, Equity, and Inclusion Committee; and the Future Faculty Preparation Program. After graduating, Kalavakonda will seek an academic position in which she hopes to continue working with students on robotics.
